Can a speech enhancer trained only on real noisy recordings cleanly separate speech and noise without ever seeing paired data? A team of researchers from Brno University of Technology and Johns Hopkins University proposes Unsupervised Speech Enhancement using Data-defined Priors (USE-DDP). USE-DDP is a dual-stream encoder–decoder that separates any noisy input into two waveforms, estimated clean speech and residual noise, and learns both solely from unpaired datasets (a clean-speech corpus and an optional noise corpus). Training enforces that the sum of the two outputs reconstructs the input waveform, which rules out degenerate solutions and aligns the design with neural audio codec objectives.
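As a rough illustration of the reconstruction constraint, here is a NumPy sketch (not the authors' code). It follows the paper's description: the two branch outputs are combined with scalar gains α and β fitted by least squares, and the result is scored against the input with SI-SDR and a multi-scale STFT term. The helper names, the log-magnitude L1 form of the STFT loss, the FFT sizes, the omitted mel term, and the `sisdr_weight` value are assumptions chosen for illustration.

```python
import numpy as np


def least_squares_gains(x, s_hat, n_hat):
    """Fit scalars (alpha, beta) that minimize ||x - alpha * s_hat - beta * n_hat||^2."""
    basis = np.stack([s_hat, n_hat], axis=1)  # shape (T, 2): one column per branch output
    (alpha, beta), *_ = np.linalg.lstsq(basis, x, rcond=None)
    return float(alpha), float(beta)


def si_sdr(reference, estimate, eps=1e-8):
    """Scale-invariant signal-to-distortion ratio in dB (higher is better)."""
    reference = reference - reference.mean()
    estimate = estimate - estimate.mean()
    scale = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = scale * reference
    residual = estimate - target
    return float(10 * np.log10((np.dot(target, target) + eps) / (np.dot(residual, residual) + eps)))


def stft_magnitude(x, n_fft, hop):
    """Hann-windowed magnitude STFT; a trailing partial frame is dropped."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=-1))


def multi_scale_stft_loss(x, y, fft_sizes=(256, 512, 1024), eps=1e-5):
    """Mean L1 distance between log-magnitude spectrograms at several resolutions."""
    total = 0.0
    for n_fft in fft_sizes:
        mag_x = stft_magnitude(x, n_fft, n_fft // 4)
        mag_y = stft_magnitude(y, n_fft, n_fft // 4)
        total += np.mean(np.abs(np.log(mag_x + eps) - np.log(mag_y + eps)))
    return float(total / len(fft_sizes))


def reconstruction_loss(x, s_hat, n_hat, sisdr_weight=0.1):
    """Penalize mismatch between the input and the rescaled sum of both branch outputs."""
    alpha, beta = least_squares_gains(x, s_hat, n_hat)
    x_hat = alpha * s_hat + beta * n_hat
    return multi_scale_stft_loss(x, x_hat) - sisdr_weight * si_sdr(x, x_hat)


# Usage: synthetic "speech" (a 220 Hz tone) plus white noise, with branch outputs
# that are off by constant gains which the least-squares fit should undo.
rng = np.random.default_rng(0)
t = np.arange(16000) / 16000
speech = np.sin(2 * np.pi * 220 * t)
noise = 0.3 * rng.standard_normal(t.size)
mixture = speech + noise
alpha, beta = least_squares_gains(mixture, 0.5 * speech, 2.0 * noise)
loss = reconstruction_loss(mixture, 0.5 * speech, 2.0 * noise)
```

Because the usage lines scale the true speech and noise by 0.5 and 2.0, the fit should recover α = 2 and β = 0.5 and an almost perfect reconstruction. Solving for the two gains in closed form, rather than fixing α = β = 1, keeps the reconstruction term from penalizing pure amplitude errors in the individual branches.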

Why does this matter?

Most learning-based speech enhancement pipelines depend on paired clean–noisy recordings, which are expensive or impossible to collect at scale in real-world conditions. Unsupervised approaches such as MetricGAN-U remove the need for clean data but tie model performance to the external, non-intrusive metrics optimized during training. USE-DDP keeps training data-only: it imposes priors through discriminators trained on independent clean-speech and noise datasets and uses reconstruction consistency to tie the estimates back to the observed mixture.

How does it work?

- Generator: A codec-style encoder compresses the input audio into a latent sequence. This sequence is split into two parallel transformer branches (RoFormer) that target clean speech and noise, respectively, and a shared decoder maps each branch back to a waveform. The input is reconstructed as the least-squares combination of the two outputs (scalars α and β compensate for amplitude errors). Reconstruction uses multi-scale mel/STFT and SI-SDR losses, as in neural audio codecs.

- Priors via adversaries: Three discriminator ensembles (clean, noise, and noisy) impose distributional constraints: the clean branch should resemble the clean-speech corpus, the noise branch should resemble a noise corpus, and the reconstructed mixture should sound natural. LS-GAN and feature-matching losses are used.

- Initialization: Initializing the encoder/decoder from a pretrained Descript Audio Codec improves convergence and final quality compared with training from scratch.
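The adversarial priors rest on the standard least-squares GAN objectives plus feature matching. The NumPy sketch below writes those losses on precomputed discriminator scores and features. It is an illustration, not the paper's code: it assumes each discriminator ensemble has been reduced to a single score array, and the dictionary keys and the choice of "real" data for each discriminator (in particular, observed noisy recordings for the mixture critic) are assumptions.

```python
import numpy as np


def lsgan_discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """LS-GAN critic loss: scores on real data are pushed to 1, on generated data to 0."""
    return float(np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2))


def lsgan_generator_loss(d_fake: np.ndarray) -> float:
    """LS-GAN generator loss: push the critic's scores on generated data toward 1."""
    return float(np.mean((d_fake - 1.0) ** 2))


def feature_matching_loss(feats_real, feats_fake) -> float:
    """Mean L1 distance between intermediate discriminator activations, averaged over layers."""
    per_layer = [np.mean(np.abs(r - f)) for r, f in zip(feats_real, feats_fake)]
    return float(np.mean(per_layer))


def generator_adversarial_loss(scores_on_generated: dict) -> float:
    """Sum the LS-GAN generator term over all discriminators (one score array each)."""
    return sum(lsgan_generator_loss(s) for s in scores_on_generated.values())


# Hypothetical scores from the three discriminators on one batch of generator outputs.
# Assumed pairing: the "clean" critic judges the speech branch (real = clean-speech corpus),
# the "noise" critic judges the noise branch (real = noise corpus), and the "noisy" critic
# judges the reconstructed mixture (real = observed noisy recordings).
scores = {
    "clean": np.array([0.8, 0.6]),
    "noise": np.array([0.5, 0.7]),
    "noisy": np.array([0.9, 1.0]),
}
total = generator_adversarial_loss(scores)
```

In a full training loop the discriminators would be updated with `lsgan_discriminator_loss` on real corpus samples versus branch outputs, while the generator minimizes the summed adversarial term plus feature matching and the reconstruction loss.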

How does it compare?

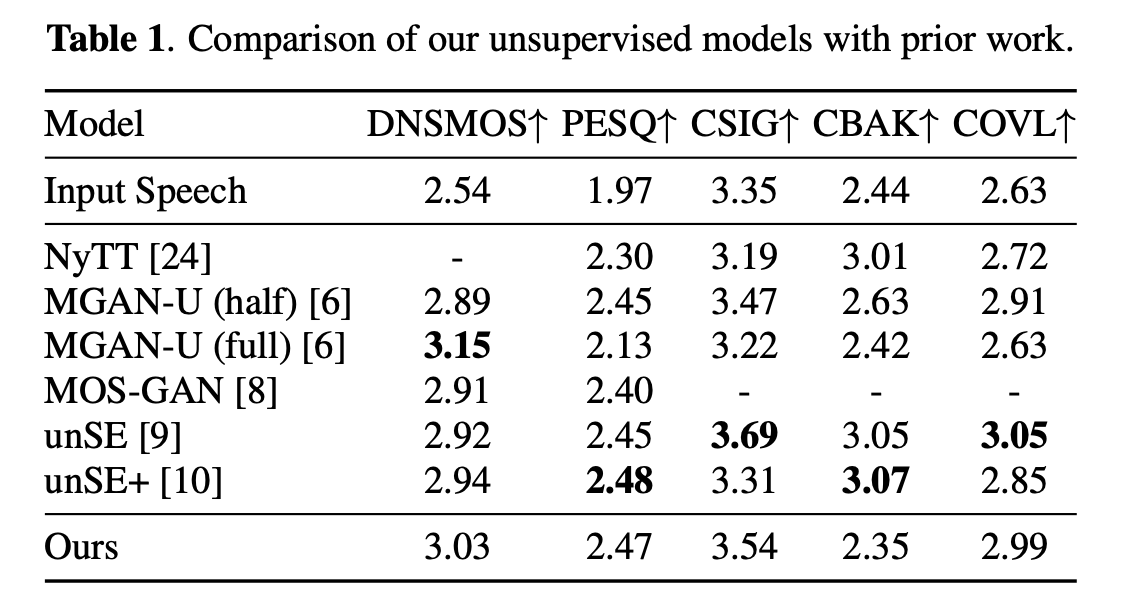

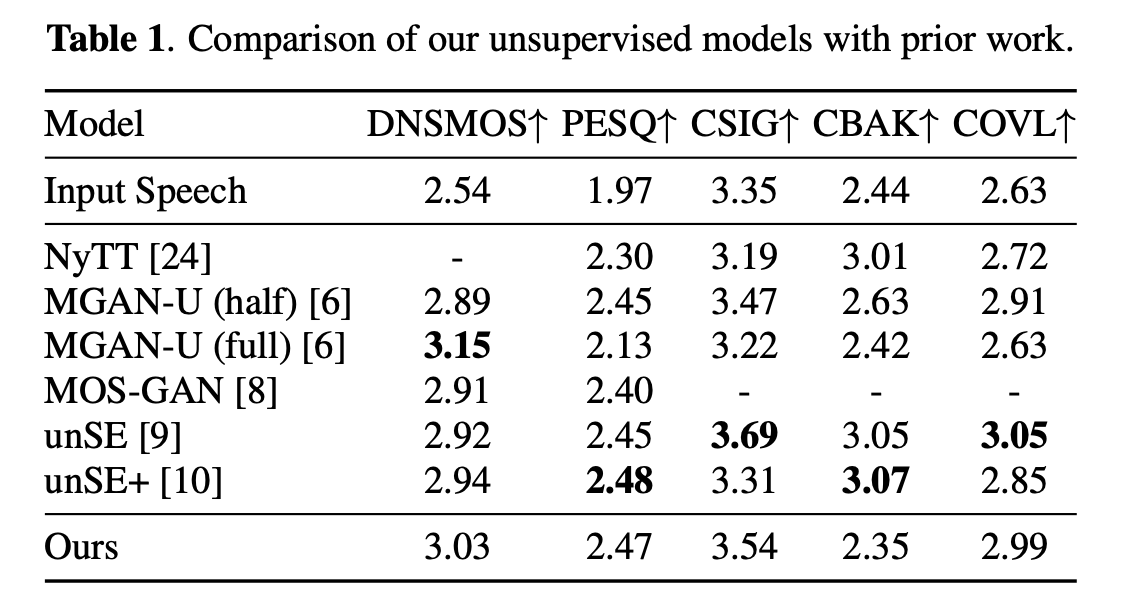

On the standard VCTK+DEMAND simulated setup, USE-DDP reports parity with the strongest unsupervised baselines (e.g., unSE/unSE+, which are based on optimal transport) and competitive DNSMOS compared with MetricGAN-U (which directly optimizes DNSMOS). Example numbers from the paper's Table 1 (input vs. systems): DNSMOS improves from 2.54 (noisy) to ~3.03 (USE-DDP), and PESQ from 1.97 to ~2.47. CBAK trails some baselines because of more aggressive noise attenuation in non-speech segments, which is consistent with the explicit noise prior.
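To put the quoted Table 1 figures in perspective, a few lines of Python compute the absolute and relative gains over the noisy input. The USE-DDP values are the approximate ones quoted above, so the percentages are only indicative.

```python
# Table 1 values quoted in the article: (noisy input, USE-DDP); USE-DDP values are approximate.
TABLE1 = {"DNSMOS": (2.54, 3.03), "PESQ": (1.97, 2.47)}


def improvement(noisy: float, enhanced: float) -> tuple[float, float]:
    """Return (absolute gain, relative gain in percent) over the noisy input."""
    gain = enhanced - noisy
    return gain, 100.0 * gain / noisy


for metric, (noisy, enhanced) in TABLE1.items():
    gain, rel = improvement(noisy, enhanced)
    print(f"{metric}: +{gain:.2f} absolute, +{rel:.1f}% relative")
```

This works out to roughly +0.49 DNSMOS (about 19% relative) and +0.50 PESQ (about 25% relative).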

Data choice isn't a detail: it's the result

A central finding: the choice of clean-speech corpus used to define the prior can swing results and even produce over-optimistic numbers on simulated tests.

- In-domain prior (VCTK clean) on VCTK+DEMAND → best scores (DNSMOS ≈3.03), but this configuration unrealistically "peeks" at the target distribution used to synthesize the mixtures.

- Out-of-domain prior → notably lower metrics (e.g., PESQ ~2.04), reflecting distribution mismatch and some noise leakage into the clean branch.

- Real-world CHiME-3: using a "close-talk" channel as the in-domain clean prior actually hurts, because the "clean" reference itself contains environmental bleed. An out-of-domain, truly clean corpus yields higher DNSMOS/UTMOS on both the dev and test sets, albeit with some intelligibility trade-off under stronger suppression.

This explains discrepancies across earlier unsupervised results and argues for careful, transparent prior selection when claiming SOTA on simulated benchmarks.

The proposed dual-branch encoder-decoder architecture treats enhancement as explicit two-source estimation with data-defined priors, not metric-chasing. The reconstruction constraint (clean + noise = input) plus adversarial priors over independent clean/noise corpora provides a clear inductive bias, and initializing from a neural audio codec is a pragmatic way to stabilize training. The results look competitive with unsupervised baselines while avoiding DNSMOS-guided objectives. The caveat is that the choice of "clean prior" materially affects the reported gains, so claims should specify which corpus was used.

Check out the PAPER.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.
