MLE-STAR (Machine Learning Engineering via Search and Targeted Refinement) is a state-of-the-art agent system developed by Google Cloud researchers to automate complex machine learning (ML) pipeline design and optimization. By leveraging web-scale search, targeted code refinement, and robust checking modules, MLE-STAR achieves unparalleled performance on a range of machine learning engineering tasks, significantly outperforming previous autonomous ML agents and even human baseline methods.

The Problem: Automating Machine Learning Engineering

While large language models (LLMs) have made inroads into code generation and workflow automation, existing ML engineering agents struggle with:

- Overreliance on LLM memory: Tending to default to “familiar” models (e.g., using only scikit-learn for tabular data), overlooking cutting-edge, task-specific approaches.

- Coarse “all-at-once” iteration: Previous agents modify whole scripts in a single shot, lacking deep, targeted exploration of pipeline components like feature engineering, data preprocessing, or model ensembling.

- Poor error and leakage handling: Generated code is prone to bugs, data leakage, or omission of provided data files.

MLE-STAR: Core Innovations

MLE-STAR introduces several key advances over prior solutions:

1. Web Search–Guided Model Selection

Instead of drawing solely from its internal “training,” MLE-STAR uses external search to retrieve state-of-the-art models and code snippets relevant to the provided task and dataset. It anchors the initial solution in current best practices, not just what LLMs “remember”.
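One way to picture this step is as "retrieve, score, keep the best." The sketch below is only an illustration under stated assumptions: `fake_search` and `fake_evaluate` are hypothetical stand-ins for a real web search and a real training run, the scores are made-up placeholders, and the idea that each retrieved candidate is scored on a validation split is an assumption the text above does not spell out.

```python
from typing import Callable, Dict, List

Candidate = Dict[str, str]


def select_initial_model(
    task: str,
    search: Callable[[str], List[Candidate]],
    evaluate: Callable[[Candidate], float],
) -> Candidate:
    """Return the retrieved candidate with the highest validation score."""
    candidates = search(task)
    if not candidates:
        raise ValueError("search returned no candidates")
    return max(candidates, key=evaluate)


# Hypothetical stand-in for a web search over models and code snippets.
def fake_search(task: str) -> List[Candidate]:
    return [
        {"model": "logistic_regression", "source": "remembered default"},
        {"model": "gradient_boosting", "source": "retrieved example"},
    ]


# Hypothetical stand-in for training and validating a candidate script.
# The numbers are placeholders, not measured results.
def fake_evaluate(candidate: Candidate) -> float:
    placeholder_scores = {"logistic_regression": 0.81, "gradient_boosting": 0.88}
    return placeholder_scores[candidate["model"]]


best = select_initial_model("tabular classification", fake_search, fake_evaluate)
print(best["model"], "from", best["source"])
```

The point of the sketch is the shape of the decision: the starting solution is chosen from what the search returns, rather than from a single default the model happens to know.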

2. Nested, Targeted Code Refinement

MLE-STAR improves its solutions via a two-loop refinement process:

- Outer Loop (Ablation-driven): Runs ablation studies on the evolving code to identify which pipeline component (data prep, model, feature engineering, etc.) most impacts performance.

- Inner Loop (Focused Exploration): Iteratively generates and tests variations for just that component, using structured feedback.

This enables deep, component-wise exploration, e.g., extensively testing ways to extract and encode categorical features rather than blindly changing everything at once.
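The two loops can be sketched in a few lines of Python. This is a toy under stated assumptions, not MLE-STAR's implementation: a "solution" is reduced to a dictionary of component choices, `toy_score` is a hypothetical scoring function with made-up gains, ablation means swapping a component for a trivial baseline, and skipping components that were already refined is a simplification chosen for this sketch.

```python
from typing import Callable, Dict, List, Set

Solution = Dict[str, str]


def ablation_impact(
    solution: Solution, baselines: Solution, score: Callable[[Solution], float]
) -> Dict[str, float]:
    """Score change when each component is swapped for its trivial baseline."""
    full = score(solution)
    impacts = {}
    for component in solution:
        ablated = dict(solution)
        ablated[component] = baselines[component]
        impacts[component] = abs(full - score(ablated))
    return impacts


def refine(
    solution: Solution,
    baselines: Solution,
    variants: Dict[str, List[str]],
    score: Callable[[Solution], float],
    outer_steps: int = 2,
) -> Solution:
    best = dict(solution)
    refined: Set[str] = set()
    for _ in range(outer_steps):
        # Outer loop: find the not-yet-refined component with the largest impact.
        impacts = ablation_impact(best, baselines, score)
        remaining = {c: v for c, v in impacts.items() if c not in refined}
        if not remaining:
            break
        target = max(remaining, key=remaining.get)
        refined.add(target)
        # Inner loop: vary only that component and keep strict improvements.
        for variant in variants.get(target, []):
            trial = dict(best)
            trial[target] = variant
            if score(trial) > score(best):
                best = trial
    return best


# Made-up gains for illustration only; a real run would train and validate.
TOY_GAIN = {
    "preprocessing": {"none": 0.00, "standardize": 0.01, "robust_scale": 0.02},
    "features": {"none": 0.00, "one_hot": 0.04, "target_encoding": 0.10},
    "model": {"linear": 0.00, "boosted_trees": 0.03, "tuned_boosted_trees": 0.05},
}


def toy_score(solution: Solution) -> float:
    return 0.70 + sum(TOY_GAIN[c][v] for c, v in solution.items())


start = {"preprocessing": "standardize", "features": "one_hot", "model": "boosted_trees"}
baselines = {"preprocessing": "none", "features": "none", "model": "linear"}
variants = {component: list(options) for component, options in TOY_GAIN.items()}

result = refine(start, baselines, variants, toy_score)
print(result)
```

With these toy numbers the first outer step targets `features` (the largest ablation impact) and the second targets `model`, while `preprocessing` is left alone because the step budget runs out. That mirrors the idea of spending effort where the ablation says it matters most.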

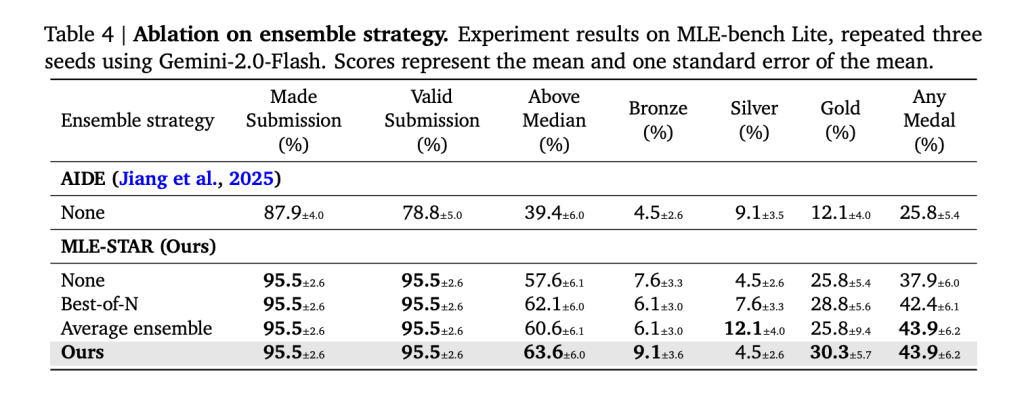

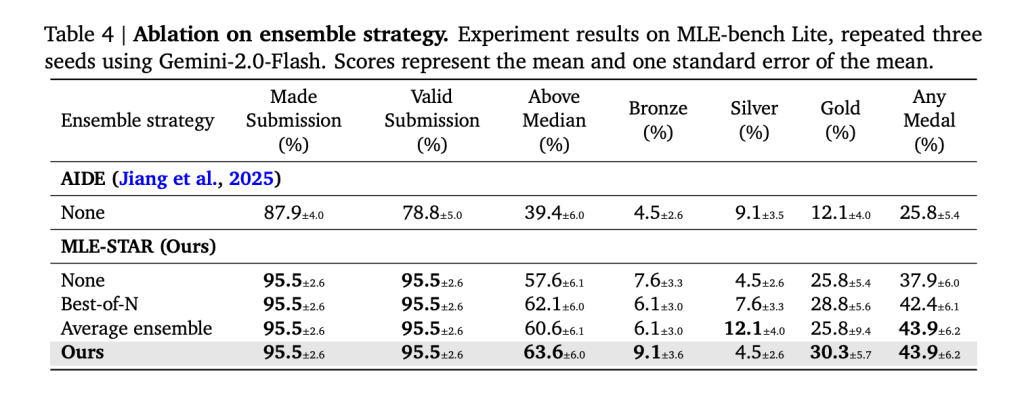

3. Self-Improving Ensembling Strategy

MLE-STAR proposes, implements, and refines novel ensemble methods by combining multiple candidate solutions. Rather than just “best-of-N” voting or simple averages, it uses its planning abilities to explore advanced strategies (e.g., stacking with bespoke meta-learners or optimized weight search).
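To make "optimized weight search" concrete, here is a minimal sketch that compares a simple average against grid-searched blend weights on a validation set. The predictions are toy values, the function names are hypothetical, and a plain grid search is just one possible strategy; nothing here is taken from MLE-STAR's code.

```python
from itertools import product
from typing import List, Sequence, Tuple


def blend(preds: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted sum of the candidates' predictions, example by example."""
    return [sum(w * p[i] for w, p in zip(weights, preds)) for i in range(len(preds[0]))]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def search_weights(
    preds: Sequence[Sequence[float]], y_true: Sequence[float], steps: int = 12
) -> Tuple[Tuple[float, ...], float]:
    """Grid-search non-negative weights summing to 1 that minimize validation MSE."""
    best_weights: Tuple[float, ...] = ()
    best_err = float("inf")
    for combo in product(range(steps + 1), repeat=len(preds)):
        if sum(combo) != steps:
            continue
        weights = tuple(c / steps for c in combo)
        err = mse(y_true, blend(preds, weights))
        if err < best_err:
            best_weights, best_err = weights, err
    return best_weights, best_err


# Toy validation targets and predictions from three candidate solutions.
y_true = [1.0, 2.0, 3.0, 4.0]
candidates = [
    [1.2, 2.3, 3.1, 4.4],  # tends to predict too high
    [0.7, 1.8, 2.8, 3.9],  # tends to predict too low
    [1.5, 1.6, 3.4, 3.7],  # noisy
]

equal_weights = [1 / len(candidates)] * len(candidates)
equal_err = mse(y_true, blend(candidates, equal_weights))
weights, searched_err = search_weights(candidates, y_true)

print("simple average MSE:", equal_err)
print("searched weights:", weights, "MSE:", searched_err)
```

With three candidates and `steps=12`, the equal-weight blend and each single candidate lie on the grid, so the searched blend can never do worse than them on this validation set. Weights tuned this way should still be checked on held-out data, since they are fitted to the same examples they are scored on.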

4. Robustness through Specialized Agents

- Debugging Agent: Automatically catches and corrects Python errors (tracebacks) until the script runs or maximum attempts are reached.

- Data Leakage Checker: Inspects code to prevent information from test or validation samples biasing the training process.

- Data Usage Checker: Ensures the solution script maximizes the use of all provided data files and relevant modalities, improving model performance and generalizability.
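The debugging agent's "retry until it runs or attempts run out" behavior can be sketched as a small loop. This is an assumption-laden illustration: `run_with_debugging` and `toy_fix` are hypothetical names, and `toy_fix` is a hard-coded stand-in for what would be an LLM call that reads the traceback and rewrites the script.

```python
import traceback
from typing import Callable, Optional, Tuple


def run_with_debugging(
    source: str,
    fix: Callable[[str, str], str],
    max_attempts: int = 3,
) -> Tuple[Optional[str], int]:
    """Run `source`; on failure hand the traceback to `fix` and try again.

    Returns (working_source, attempts_used), or (None, attempts_used) if the
    script still fails after `max_attempts` runs.
    """
    attempts = 0
    while attempts < max_attempts:
        attempts += 1
        try:
            # Generated code should run in a sandbox; plain exec is for the demo only.
            exec(compile(source, "<candidate>", "exec"), {})
            return source, attempts
        except Exception:
            if attempts < max_attempts:
                source = fix(source, traceback.format_exc())
    return None, attempts


# Hypothetical stand-in for an LLM-based fixer: it only knows one repair.
def toy_fix(source: str, error_text: str) -> str:
    if "NameError" in error_text and "math" in error_text:
        return "import math\n" + source
    return source


broken = "root = math.sqrt(16)"
fixed, attempts = run_with_debugging(broken, toy_fix)
print(attempts)
print(fixed)
```

Here the first run fails with a `NameError`, the fixer adds the missing import, and the second run succeeds. Capping the number of attempts keeps a script that cannot be repaired from looping forever.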

Quantitative Results: Outperforming the Field

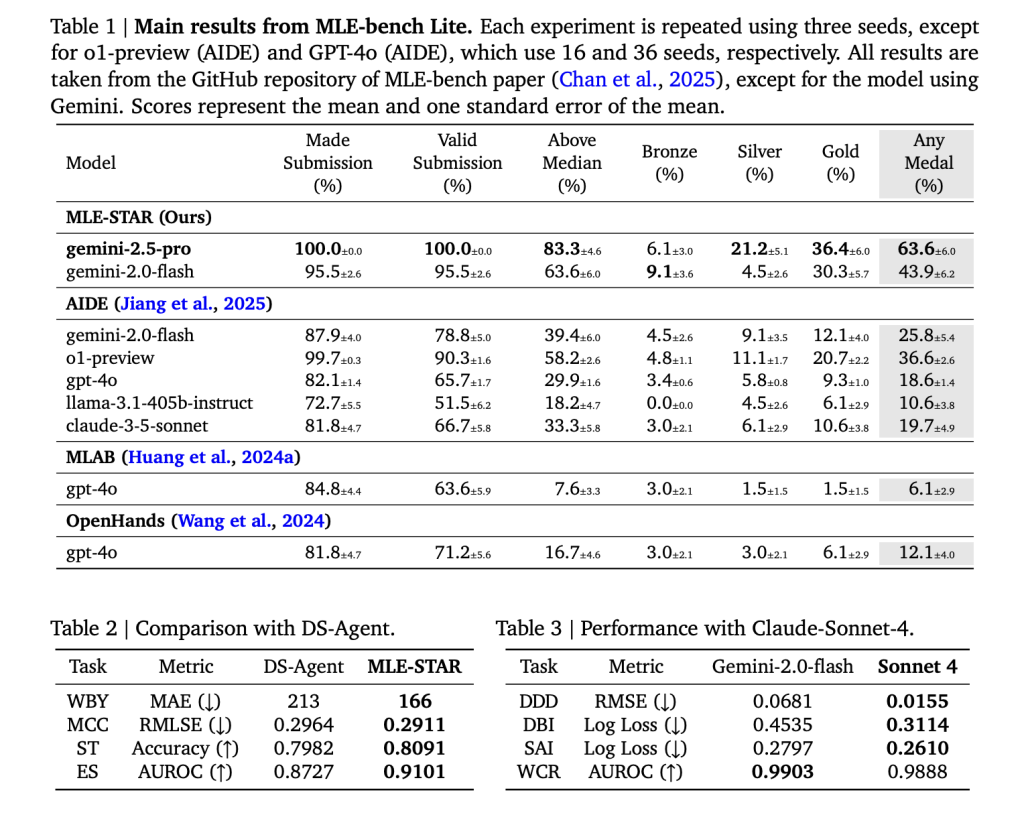

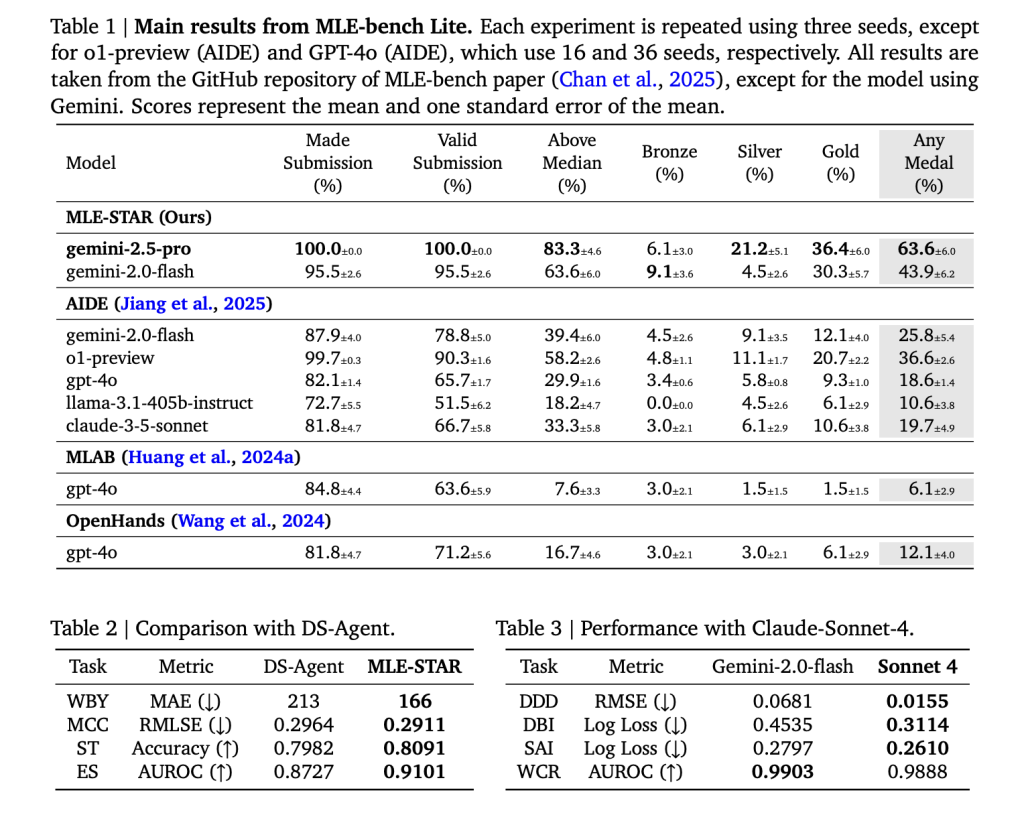

MLE-STAR’s effectiveness is rigorously validated on the MLE-Bench-Lite benchmark (22 challenging Kaggle competitions spanning tabular, image, audio, and text tasks):

| Metric | MLE-STAR (Gemini-2.5-Pro) | AIDE (Best Baseline) |
|---|---|---|
| Any Medal Rate | 63.6% | 25.8% |
| Gold Medal Rate | 36.4% | 12.1% |
| Above Median | 83.3% | 39.4% |
| Valid Submission | 100% | 78.8% |

- MLE-STAR achieves more than double the rate of “medal” (top-tier) solutions compared with the previous best agents.

- On image tasks, MLE-STAR overwhelmingly chooses modern architectures (EfficientNet, ViT), leaving older standbys like ResNet behind, which translates directly into higher podium rates.

- The ensemble strategy alone contributes an additional boost, not just picking but combining winning solutions.
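The "more than double" claim can be checked directly from the table. The snippet below only divides the reported rates; the dictionary keys are labels chosen for this example.

```python
# Rates in percent, copied from the MLE-Bench-Lite table above:
# (MLE-STAR, AIDE best baseline).
rates = {
    "any_medal": (63.6, 25.8),
    "gold_medal": (36.4, 12.1),
    "above_median": (83.3, 39.4),
    "valid_submission": (100.0, 78.8),
}

ratios = {name: star / aide for name, (star, aide) in rates.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

This works out to roughly 2.47x for any medal, 3.01x for gold medals, 2.11x for above-median finishes, and 1.27x for valid submissions, so the medal rate is indeed more than double and the gold rate is about triple.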

Technical Insights: Why MLE-STAR Wins

- Search as Foundation: By pulling example code and model cards from the web at run time, MLE-STAR stays far more up to date, automatically including new model types in its initial proposals.

- Ablation-Guided Focus: Systematically measuring the contribution of each code section enables “surgical” improvements, starting with the most impactful pieces (e.g., targeted feature encodings, advanced model-specific preprocessing).

- Adaptive Ensembling: The ensemble agent doesn’t just average; it intelligently tests stacking, regression meta-learners, optimal weighting, and more.

- Rigorous Safety Checks: Error correction, data leakage prevention, and full data usage unlock much higher validation and test scores, avoiding pitfalls that trip up vanilla LLM code generation.

Extensibility and Human-in-the-loop

MLE-STAR is also extensible:

- Human experts can inject cutting-edge model descriptions for faster adoption of the latest architectures.

- The system is built atop Google’s Agent Development Kit (ADK), facilitating open-source adoption and integration into broader agent ecosystems, as shown in the official samples.

Conclusion

MLE-STAR represents a true leap in the automation of machine learning engineering. By enforcing a workflow that begins with search, tests code via ablation-driven loops, blends solutions with adaptive ensembling, and polices code outputs with specialized agents, it outperforms prior art and even many human competitors. Its open-source codebase means that researchers and ML practitioners can now integrate and extend these state-of-the-art capabilities in their own projects, accelerating both productivity and innovation.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
